Implementing the Modern Data Strategy: Microsoft Fabric Blueprint

Renat Zubayrov

Part 2 of the Modern Data Strategy Series. The introductory post is here.

In our previous post, we established that the biggest barrier to Revenue AI is not the model—it is the data mess. We introduced the Medallion Architecture not just as a technical pattern, but as an operating model to manage complexity, enforce discipline, and build trust.

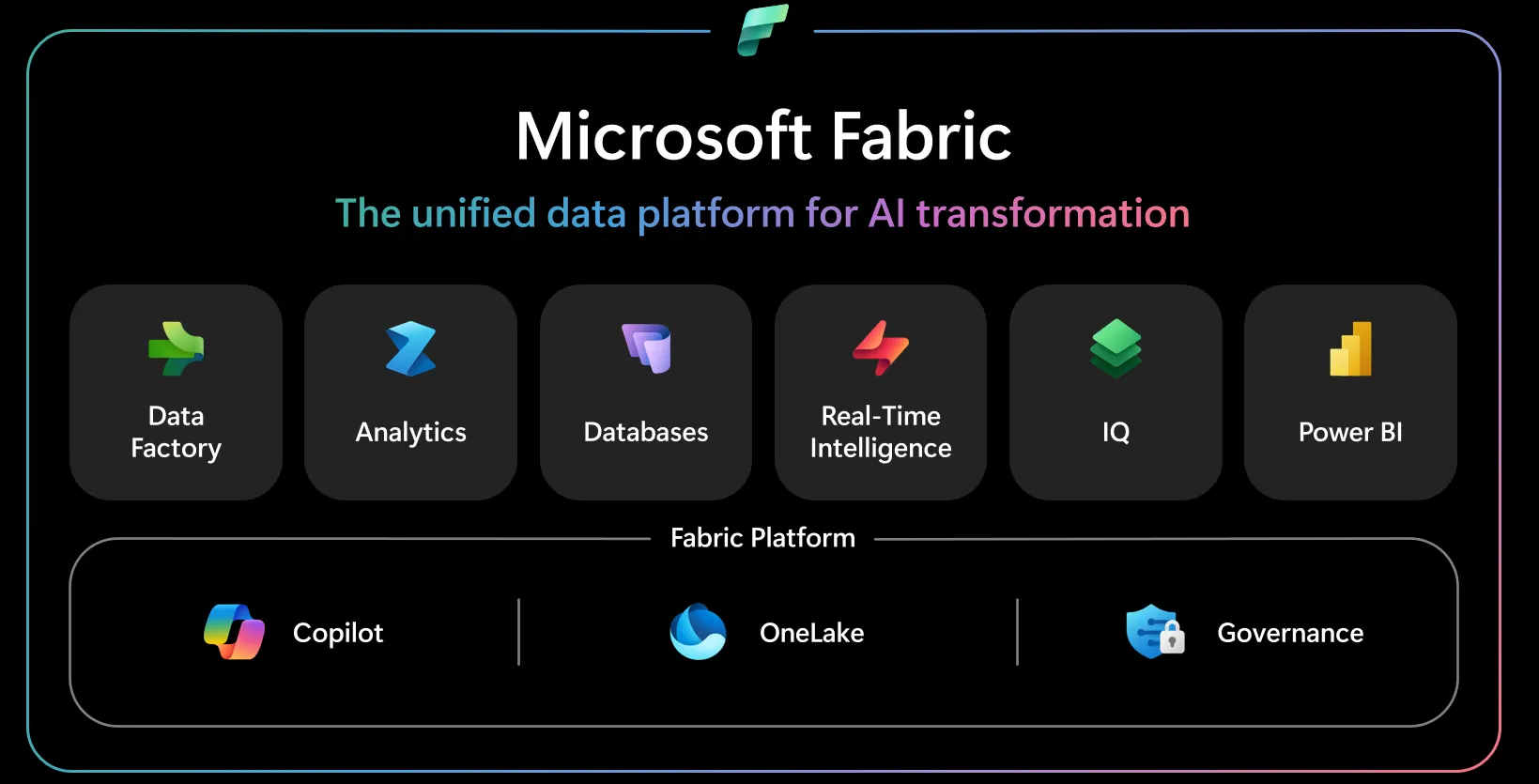

This installment turns strategy into execution. A fundamental shift to internalize upfront is that Fabric is SaaS, while much of the classic Azure data stack is PaaS—you provision and tune far less infrastructure, but you must operate within shared-capacity and platform guardrails. In return, Fabric integrates storage, compute, governance, and BI into one managed experience, so architecture decisions move from infrastructure wiring to workspace design, contracts, and operational discipline.

Below is a practitioner-focused blueprint for implementing Medallion Architecture on the Microsoft Fabric stack—emphasizing environment separation, deliberate schema governance, realistic capacity planning, and an architecture that remains operable as teams and workloads grow.

1. The Foundation: OneLake and Delta

Most data programs suffer from a "copy-storm": the same dataset is replicated across multiple workspaces, semantic models, and storage locations. Copies break lineage, create inconsistent numbers, and inflate cost.

Fabric’s anchor is OneLake, a tenant-wide data lake that acts as a shared storage plane for Fabric engines.

- Unified storage plane: Store data once and access it through multiple engines (Spark, T-SQL, KQL) without creating a second physical copy for each workload.

- Delta as the default table format: Fabric standardizes on Delta Lake, providing ACID transactions, schema enforcement, and time travel. For revenue and finance reporting, these properties are not "nice to have"—they are table stakes.

2. The Medallion Blueprint in Fabric

Medallion Architecture is not just about three folders named Bronze/Silver/Gold. The value comes from clear contracts: what each layer guarantees, what it does not guarantee, and who owns it.

In Fabric, the layers are typically realized via separate Lakehouses and/or Warehouses (and often separate workspaces) to enforce isolation, security boundaries, and CI/CD promotion.

Bronze: The Raw Landing Zone

Bronze is your audit trail. Data lands here as close as possible to what the source system emitted.

- Core rule: Bronze is immutable and append-only. No updates-in-place, no "cleanup" that loses the ability to replay history.

- Transformations: Keep them minimal. Acceptable examples include column-name normalization for technical safety, typing of ingestion metadata, and adding load identifiers.

- Replayability: Always preserve enough metadata to reprocess (ingestion timestamp, source extraction watermark, load/run ID, source file name, record hash if needed).

To isolate sources and domains at the Bronze layer, use either:

- Multiple Bronze workspaces (by domain such as CRM/ERP/Product, or by business unit), or

- Logical isolation per source/domain within a Bronze workspace (separate Lakehouses, separate folders/tables, separate security groups).
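The replay metadata listed for Bronze (ingestion timestamp, source watermark, run ID, source file name, record hash) could be attached at landing time roughly as follows. This is a minimal pure-Python sketch rather than a Fabric API: the function name, the underscore-prefixed column names, and the sample file path are all assumptions made for illustration.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone


def add_bronze_metadata(record, source_file, run_id, watermark):
    """Return a copy of a raw record with replay metadata attached.

    The source payload is left untouched; only underscore-prefixed
    technical columns (hypothetical names) are added.
    """
    # Serialize with sorted keys so the same payload always yields the same hash.
    payload = json.dumps(record, sort_keys=True, default=str)
    return {
        **record,
        "_ingested_at": datetime.now(timezone.utc).isoformat(),
        "_source_watermark": watermark,
        "_run_id": run_id,
        "_source_file": source_file,
        "_record_hash": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
    }


run_id = str(uuid.uuid4())
raw = {"deal_id": "D-1001", "amount": "2500.00", "stage": "Closed Won"}
bronze_row = add_bronze_metadata(
    raw, "crm/deals_2024_06_01.json", run_id, "2024-06-01T00:00:00Z"
)
```

Because the hash covers only the source payload, re-ingesting the same record in a later run produces the same `_record_hash`, which is what makes replay and duplicate detection possible without mutating Bronze.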

Silver: The Trust Layer

Silver is where raw data becomes fit for business use. This is where you standardize entities, enforce integrity, deduplicate, and align definitions.

- Primary goal: create governed, reusable entity tables (e.g., Customer, Deal, Invoice, Subscription) with consistent keys, typing, and history semantics.

- Data quality gates: apply deterministic checks (uniqueness, referential integrity where appropriate, nullability expectations, outlier rules) and route rejects/quarantine for investigation.
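A quality gate of the kind described above can be sketched as a function that splits incoming rows into accepted and quarantined sets. This is an illustrative pure-Python version, assuming rows are plain dictionaries; in practice the same logic would usually run in Spark or SQL, and the function and field names here are hypothetical.

```python
def apply_quality_gates(rows, key, required):
    """Split rows into (accepted, quarantined) using deterministic checks."""
    accepted, quarantined = [], []
    seen_keys = set()
    for row in rows:
        reasons = []
        # Nullability: the key and every required field must be non-empty.
        for field in sorted({key, *required}):
            if row.get(field) in (None, ""):
                reasons.append(f"missing:{field}")
        # Uniqueness: the first occurrence of a key wins, later ones are rejected.
        if row.get(key) in seen_keys:
            reasons.append(f"duplicate:{key}")
        if reasons:
            # Keep the rejected row together with the reasons for investigation.
            quarantined.append({**row, "_reject_reasons": reasons})
        else:
            seen_keys.add(row[key])
            accepted.append(row)
    return accepted, quarantined


rows = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C1", "email": "dup@example.com"},
    {"customer_id": "C2", "email": None},
]
accepted, quarantined = apply_quality_gates(rows, key="customer_id", required=["email"])
```

Routing rejects to a quarantine set, instead of dropping them, keeps the gate auditable: every excluded row carries the rule it failed.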

Fabric gives you multiple ways to implement Bronze → Silver:

- Spark-based ELT (Notebooks / Spark jobs): Prefer this when you have large datasets, semi-structured data (JSON), complex joins/transformations, heavy dedup logic, or you need scalable compute patterns.

- SQL-based ELT (Warehouse T-SQL, Lakehouse SQL endpoint, views): Prefer this when transformations are mostly relational, the team is SQL-strong, and you value simplicity and governance. SQL-only pipelines are often faster to build and easier to review—but can become limiting when data volume, semi-structured content, or transformation complexity increases.

Materialized Lake Views

Materialized lake views can reduce orchestration complexity by letting you declare transformation logic and have the system manage refresh. Treat them as an optimization lever, particularly useful for well-scoped, SQL-friendly transformations, but validate preview limitations and operational behavior (refresh semantics, monitoring, lineage) before standardizing on them for mission-critical pipelines.

Gold: The Business and Consumption Layer

Gold is optimized for consumption: star schemas, curated marts, and metrics defined centrally.

- Contract: Gold is the single source of truth for consumption, meaning business logic lives here—not hidden in individual Power BI files.

- Semantic model discipline: define measures once, define dimensional conformance once, and make it the shared metric layer for dashboards and downstream AI.

There is no universally correct choice between a Warehouse and a Lakehouse. Use the engine that matches your workload and team.

- Warehouse (T-SQL): strong for structured BI, governance via explicit DDL, predictable relational modeling, and tighter control over schema and permissions. A good fit when BI is dominant and SQL governance matters.

- Lakehouse (Spark + SQL endpoint): strong for data engineering flexibility, Spark-native transformations, and unified handling of structured and semi-structured data. Often a better fit when engineering workloads dominate and you want end-to-end lakehouse patterns.

Key factors to weigh when choosing:

- Team skills (SQL-first vs Spark-first)

- Transformation complexity and data types (strictly relational vs mixed/semi-structured)

- Governance model (explicit DDL control vs flexible iterative modeling)

- Consumption needs (BI-first vs broader data/ML usage)

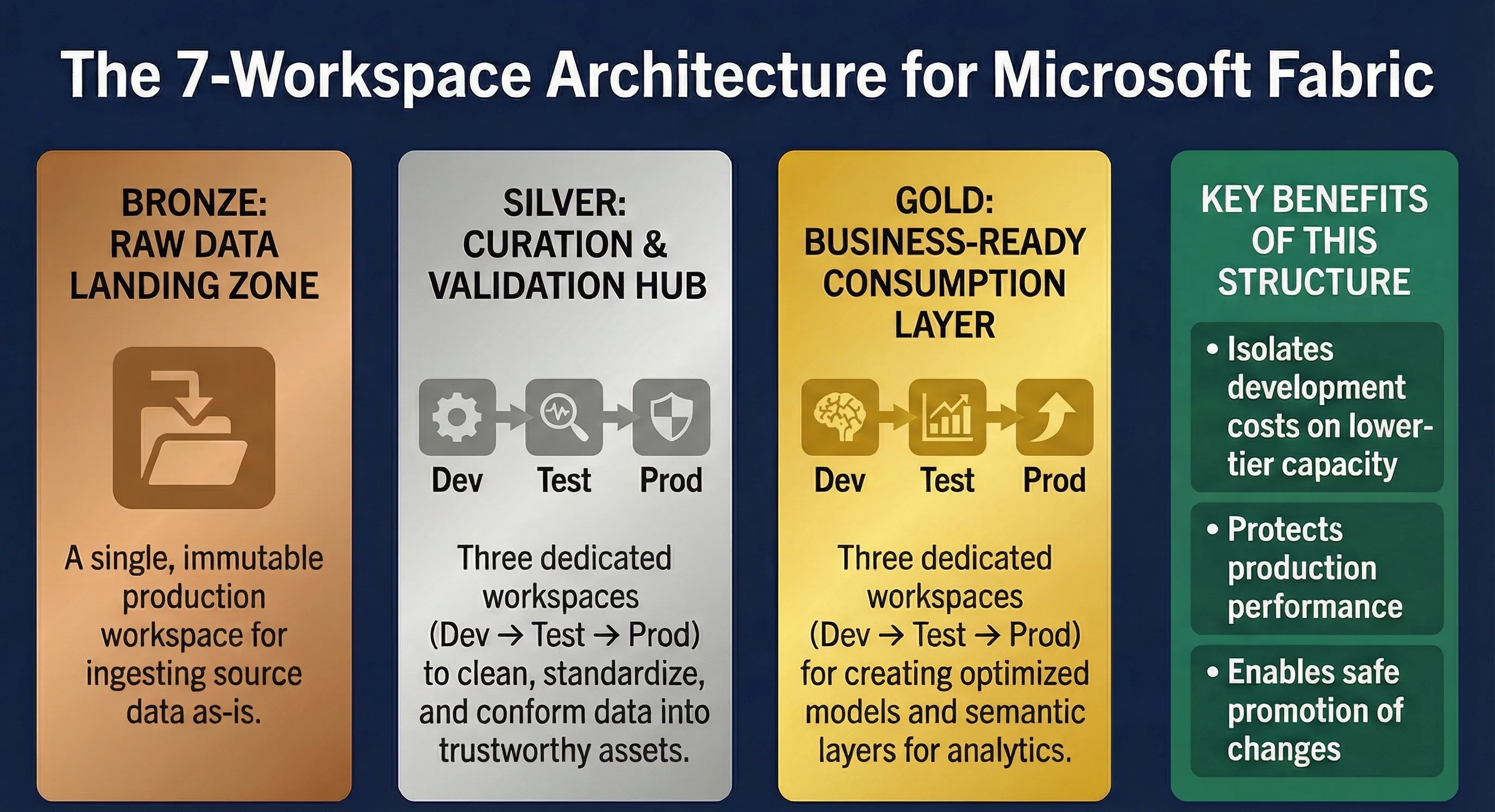

3. Environments and Workspace Design

A modern data strategy treats data assets like software: you need controlled change, safe testing, and predictable releases.

Dev → Test → Prod separation (minimum viable)

At a minimum, implement Dev → Test → Prod for Silver and Gold.

This prevents:

- Resource contention: development experiments competing with production refresh and dashboard queries.

- Accidental impact on production dashboards: a single inefficient notebook or pipeline can throttle interactive BI.

- Risky schema changes: breaking downstream consumers by evolving tables without validation.

A strong operational baseline is to separate transformation and consumption ownership:

- Bronze/Silver = engineering workspaces (ingestion + curation)

- Gold = consumption workspaces (marts + semantic models + reports)

This separation provides:

- Clear ownership and on-call responsibility

- Stronger security boundaries (raw data access vs curated consumption access)

- Reduced operational risk (changes in ETL do not directly disrupt BI artifacts)

Avoid a single "Bronze (Prod)" workspace becoming a shared playground. Instead:

- Keep production ingestion isolated, and

- Provide dev ingestion patterns either in a Dev Bronze workspace or via parameterized pipelines targeting dev storage locations.

A reasonable starting workspace layout:

- Bronze: Prod (and optionally Dev/Test by domain) or domain-isolated Bronze Lakehouses

- Silver: Dev / Test / Prod

- Gold: Dev / Test / Prod

- Optional Self-Service: separate workspace + capped capacity for exploratory BI

4. Capacity Planning

Fabric capacity is shared compute. Multiple engines (Spark, pipelines, SQL queries, semantic model refresh) can contend on the same capacity.

Why small capacities often fail in team settings

For individual experimentation, small SKUs may work. For team-based development, F2 is usually insufficient once you introduce:

- Spark notebooks

- concurrent pipelines

- multiple developers running queries and refreshes

Understanding contention behavior

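Fabric manages capacity through bursting and smoothing, which can feel non-intuitive: an intensive burst may temporarily draw more compute than the capacity provides, and that usage is then smoothed over subsequent time windows, during which other workloads can be slowed or throttled. The operational takeaway is simple: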

- Isolate Dev from Prod so experimentation cannot degrade production dashboards.

- Schedule heavy workloads (batch refresh, large Spark jobs) to avoid peak BI usage.

- Monitor and iterate sizing—treat capacity as an operational SLO, not a one-time procurement decision.

5. CI/CD, Infrastructure as Code, and Notebooks

Fabric provides native Git integration and Deployment Pipelines to promote content across environments.

- IaC mindset: treat Lakehouses, Warehouses, notebooks, pipelines, and semantic models as versioned artifacts. Review changes in pull requests.

- Promotion discipline: use Deployment Pipelines to move from Dev → Test → Prod and to swap environment-specific parameters (connections, workspace IDs, endpoints).
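One way to keep environment-specific parameters out of transformation code is a single lookup that every notebook or pipeline resolves at run time. The sketch below is a generic Python pattern, not the Deployment Pipelines feature itself; the workspace, Lakehouse, and connection names, and the path format, are placeholders invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvConfig:
    workspace: str          # hypothetical workspace name
    lakehouse: str          # hypothetical Lakehouse name
    source_connection: str  # hypothetical connection alias


# Placeholder values for illustration only.
ENVIRONMENTS = {
    "dev": EnvConfig("silver-dev", "lh_silver", "crm-sandbox"),
    "test": EnvConfig("silver-test", "lh_silver", "crm-sandbox"),
    "prod": EnvConfig("silver-prod", "lh_silver", "crm-live"),
}


def resolve_config(env: str) -> EnvConfig:
    """Look up the settings for one environment, failing loudly on typos."""
    try:
        return ENVIRONMENTS[env.lower()]
    except KeyError:
        raise ValueError(
            f"Unknown environment {env!r}; expected one of {sorted(ENVIRONMENTS)}"
        ) from None


def table_path(env: str, table: str) -> str:
    """Build a logical table reference; the path format is made up."""
    cfg = resolve_config(env)
    return f"{cfg.workspace}/{cfg.lakehouse}/Tables/{table}"
```

With this shape, promoting code from Dev to Prod changes only the `env` argument, so a pull request never has to touch hard-coded workspace names.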

Notebooks are often the most productive tool for complex transformations and debugging. They also introduce operational risks if teams do not enforce software engineering discipline.

Best practices:

- Keep notebooks modular (functions/utilities, clear entry points)

- Add basic testing/validation steps (row counts, expectations, schema checks)

- Enforce code review through Git PRs

- Separate exploratory notebooks from production pipelines (different folders, different policies)
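The practices above translate into a simple notebook shape: a pure transformation function, a validation step, and one entry point. The following is a minimal sketch using plain Python lists in place of Spark DataFrames; the function names and the sample columns are assumptions chosen for illustration.

```python
def normalize_deals(rows):
    """Pure transformation: no I/O, so it can be unit-tested outside a notebook."""
    return [
        {"deal_id": r["deal_id"].strip(), "amount": float(r["amount"])}
        for r in rows
    ]


def validate(source_rows, result_rows, expected_columns):
    """Basic post-run checks: row-count reconciliation and a column check."""
    if len(result_rows) != len(source_rows):
        raise ValueError(
            f"row count changed: {len(source_rows)} -> {len(result_rows)}"
        )
    for row in result_rows:
        if set(row) != set(expected_columns):
            raise ValueError(f"unexpected columns: {sorted(row)}")


def main(source_rows):
    """Single entry point that a scheduled pipeline would call."""
    result = normalize_deals(source_rows)
    validate(source_rows, result, expected_columns=["deal_id", "amount"])
    return result


output = main([
    {"deal_id": " D-1 ", "amount": "100.5"},
    {"deal_id": "D-2", "amount": "20"},
])
```

Keeping I/O out of `normalize_deals` is the design choice that matters: the same function can be reviewed in a pull request and exercised by a test without a running capacity.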

6. Schema Evolution and Contract Management

Schema handling is where many Fabric implementations drift into brittleness.

Ingestion convenience vs governance

- "Merge schema" / automatic schema evolution can be convenient for ingestion, especially when sources add columns frequently.

- Explicit DDL (declared schemas, controlled changes) provides stronger governance, better predictability, and clearer contracts for downstream consumers.

A pragmatic middle ground:

- For volatile sources, allow additive column evolution in Bronze, but stabilize in Silver with explicit schemas.

- For governed domains (finance, revenue recognition), prefer explicit schema control earlier.

In Silver and Gold, treat tables as data products with consumers.

- Prefer additive-only changes (new columns, new tables) over breaking changes.

- If a breaking change is required, version it (e.g., revenue_v2, customer_status_v2) and run both versions in parallel for a migration window.
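The additive-versus-breaking distinction can be checked mechanically before a change is promoted. Here is a small sketch that compares two schemas expressed as column-to-type mappings. It is an assumption-laden illustration: the function name is hypothetical, and it conservatively treats any type change as breaking, even though some widenings may be safe in practice.

```python
def classify_schema_change(current, proposed):
    """Compare two {column: type} mappings and classify the change."""
    added = sorted(set(proposed) - set(current))
    removed = sorted(set(current) - set(proposed))
    # Columns present in both schemas whose declared type differs.
    retyped = sorted(
        c for c in set(current) & set(proposed) if current[c] != proposed[c]
    )
    if removed or retyped:
        kind = "breaking"
    elif added:
        kind = "additive"
    else:
        kind = "none"
    return {"kind": kind, "added": added, "removed": removed, "retyped": retyped}


current = {"customer_id": "string", "status": "string"}
additive = classify_schema_change(current, {**current, "segment": "string"})
breaking = classify_schema_change(current, {"customer_id": "string", "status": "int"})
```

A check like this could run in a pull-request pipeline: an "additive" result passes, while a "breaking" result signals that a versioned table and a migration window are needed.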

7. Governance, Naming, and AI Readiness

Governance is not just compliance—it is correctness at scale.

- Lineage and auditability: integrate with Microsoft Purview to trace KPIs from Gold back to Bronze.

- Naming conventions: use human-readable names in Gold and semantic models (e.g., Customer Name, Invoice Amount, Churn Risk Score). Avoid cryptic abbreviations.

- Descriptions and metadata: add column and measure descriptions consistently.

Why this matters for AI readiness:

- Better semantic naming and descriptions improve Copilot interactions, data agents, and future AI-assisted querying.

- Clear semantics reduce the risk of misinterpretation when users (or AI) explore the model without deep tribal knowledge.
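Naming and description rules are easier to enforce when they are linted rather than left to review. The sketch below is a crude heuristic, assuming column metadata is available as a list of dictionaries: it flags names that look like technical identifiers (lowercase letters, digits, and underscores only) and columns without a description. The function name, the rule, and the sample columns are illustrative assumptions.

```python
import re

# Assumed heuristic: names made only of lowercase letters, digits, and
# underscores (e.g. "inv_amt") look technical, not human-readable.
TECHNICAL_NAME = re.compile(r"^[a-z0-9_]+$")


def lint_columns(columns):
    """Return a list of (column_name, issue) pairs for a Gold table."""
    issues = []
    for col in columns:
        name = col["name"]
        if TECHNICAL_NAME.match(name):
            issues.append((name, "name is not human-readable"))
        # Treat a missing, None, or whitespace-only description as absent.
        if not (col.get("description") or "").strip():
            issues.append((name, "missing description"))
    return issues


columns = [
    {"name": "Customer Name", "description": "Legal name of the billed customer."},
    {"name": "inv_amt", "description": ""},
]
issues = lint_columns(columns)
```

The heuristic will produce false positives (a single lowercase word is also flagged), so it is better suited to a warning in code review than to a hard gate.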

8. Getting Started

You do not need a multi-year program to get value, but you also should not blindly copy a reference architecture.

Avoiding over-engineering

- Medallion is a pattern, not a law. Adapt it to your org size and maturity.

- Teams migrating from SQL Server should evaluate whether Spark/lakehouse complexity is justified for the current scope.

- If your workloads are small, relational, and BI-dominant, you may get most of the benefit with a SQL-governed Warehouse-centric approach.

A 12-week rollout could look like this:

- Weeks 1–2: Select 1–2 high-value decisions and define metrics and contracts.

- Weeks 3–6: Implement Bronze → Silver ingestion patterns with quality gates.

- Weeks 7–9: Build Gold marts and semantic models (Direct Lake where appropriate).

- Weeks 10–12: Harden with monitoring, CI/CD, and schema governance.

Implementing Medallion Architecture in Fabric is like organizing a professional kitchen:

- Bronze is the delivery dock where raw ingredients arrive.

- Silver is the prep station where ingredients are cleaned and standardized.

- Gold is the pass where finished dishes are plated for customers.

In the next post, we will explore how to implement a similar strategy using Databricks for teams requiring deeper engineering control.
